Though we'll surely fire it up in the future once our video cards "happen to be able to run it on high," very few people are going to go out of their way to spend $500+ on this silly title. If you had an 8800GT or whatever, you've already played this game "well enough" on medium settings and are plenty tired of it. So maybe this is a win, but I doubt too many people care.

gochichi - Tuesday

You know, when you consider the price and you look at the benchmarks, you start looking for features, and NVIDIA just doesn't have the features going on at all.

COD4 ran perfectly at 1920x1200 with last-gen stuff (the HD38GT(S)), so now the benchmarks have to be for outrageous resolutions that only a handful of monitors can handle (and those customers already bought SLI or XFIRE, or GTX2, etc.).

Crysis is a pig of a game, but it's not that great (it is a good technical preview though, I admit), and I don't think even these new cards really satisfy this system hog.

In any case, then you look at ATI, and they have the HDMI audio and the DX 10.1 support, and all they have to do at this point is A) get a good price out the door, B) make a good profit (make them cheap; these NVIDIA cards are expensive to make, no doubt), and C) handily beat the 8800GTS, and many of us are going to be sold. These cards are what I would call a next-gen preview. Some overheated prototypes of things to come.

With increasing transistor counts and huge GPUs with lots of memory, power isn't something that can stay low all the time. Eventually the hardware will actually have to do something, and then voltages will rise, clock speeds will increase, and power will be converted into dissipated heat and frames per second. It is hard to say which is more impressive: the power-saving features at idle or the power draw at load. There is an in-between stage for HD video playback that runs at about 32W, and it is good to see some attention paid to this issue specifically. This bodes well for mobile chips based on the GT200 design, but on the desktop this isn't as mission-critical. Yes, reducing power (and thus what I have to pay my power company) is a good thing, but plugging a card like this into your computer is like driving an exotic car: if you want the experience, you've got to pay for the gas. Idle power this low is definitely nice to see. Having high-end cards idle near midrange solutions from previous generations is a step in the right direction. But as soon as we open up the throttle, that power miser is out the door and joules start flooding in by the bucket.

Cooling NVIDIA's hottest card isn't easy, and you can definitely hear the beast moving air. At idle, the GPU is as quiet as any other high-end NVIDIA GPU. Under load, as the GTX 280 heats up, the fan spins faster and moves much more air, which quickly becomes audible. It's not GeForce FX annoying, but it's not as quiet as other high-end NVIDIA GPUs; then again, there are 1.4 billion transistors switching in there. If you have a silent PC, the GTX 280 will definitely un-silence it and put out enough heat to make the rest of your fans work harder. If you're used to a GeForce 8800 GTX, GTS or GT, the noise will bother you. The problem is that returning to idle after a couple of hours of gaming results in a fan that doesn't want to spin down as low as when you first turned your machine on. While it's impressive that NVIDIA built this chip on a 65nm process, it desperately needs to move to 55nm.

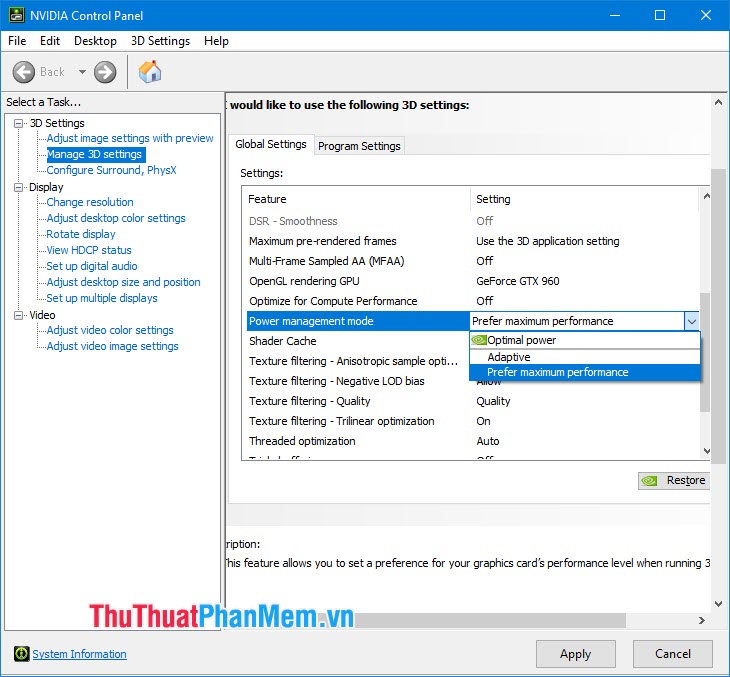

Power is a major concern for many tech companies going forward, and just adding features "because we can" isn't the modus operandi anymore. Now it's cool (pardon the pun) to focus on power management, performance per watt, and similar metrics. To that end, NVIDIA has beaten its GT200 into such submission that its 2D power consumption can reach as low as 25W, which can have a very positive impact on idle power for a very powerful bit of hardware. These enhancements aren't breakthrough technologies: NVIDIA is just using clock gating and dynamic voltage and clock speed adjustment to achieve these savings. There is hardware on the GPU to monitor utilization and automatically set the clock speeds to different performance modes (either off for hybrid power, 2D/idle, HD video, or 3D/performance). Mode changes can be done on the millisecond level. This is very similar to what AMD has already implemented.
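To make the mode switching described above a bit more concrete, here is a minimal Python sketch of a utilization-driven mode selector. It is not NVIDIA's algorithm: the `select_mode` and `relative_dynamic_power` helpers, the 30% utilization threshold, the `hybrid_power_off` flag, and the relative clock and voltage values in `OPERATING_POINTS` are all hypothetical. The power estimate uses the standard CMOS approximation that dynamic power scales with activity × V² × f, which is why lowering voltage together with clock speed (and gating idle clocks, which lowers activity) saves more than dropping clocks alone.

```python
from enum import Enum


class Mode(Enum):
    OFF = "off (hybrid power)"
    IDLE_2D = "2D/idle"
    HD_VIDEO = "HD video"
    PERF_3D = "3D/performance"


# Hypothetical (clock scale, voltage scale) per mode, relative to full 3D settings.
# Illustrative assumptions only, not GT200 specifications.
OPERATING_POINTS = {
    Mode.OFF: (0.0, 0.0),
    Mode.IDLE_2D: (0.25, 0.80),
    Mode.HD_VIDEO: (0.40, 0.85),
    Mode.PERF_3D: (1.00, 1.00),
}

# Hypothetical utilization threshold above which the 3D clocks are used.
PERF_THRESHOLD = 0.30


def select_mode(utilization: float, video_decode_active: bool, hybrid_power_off: bool) -> Mode:
    """Pick a performance mode from one utilization sample in [0, 1]."""
    if hybrid_power_off:
        # Discrete GPU powered down; the integrated GPU drives the display.
        return Mode.OFF
    if utilization >= PERF_THRESHOLD:
        return Mode.PERF_3D
    if video_decode_active:
        return Mode.HD_VIDEO
    return Mode.IDLE_2D


def relative_dynamic_power(mode: Mode, activity: float = 1.0) -> float:
    """Dynamic power relative to full 3D load, using P ~ activity * V^2 * f.

    Clock gating is modeled as a lower `activity` factor; DVFS changes V and f.
    """
    clock, voltage = OPERATING_POINTS[mode]
    return activity * voltage * voltage * clock


if __name__ == "__main__":
    # Simulated utilization samples: desktop idle, video playback, gaming.
    for util, video in [(0.05, False), (0.10, True), (0.90, False)]:
        mode = select_mode(util, video, hybrid_power_off=False)
        print(f"{mode.value:>15}: {relative_dynamic_power(mode):.3f} of full dynamic power")
```

Because voltage enters squared, the hypothetical 2D point in this sketch (quarter clock, 80% voltage) lands well below a quarter of full dynamic power, which is the intuition behind combining voltage and clock scaling rather than relying on clock speed alone.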
